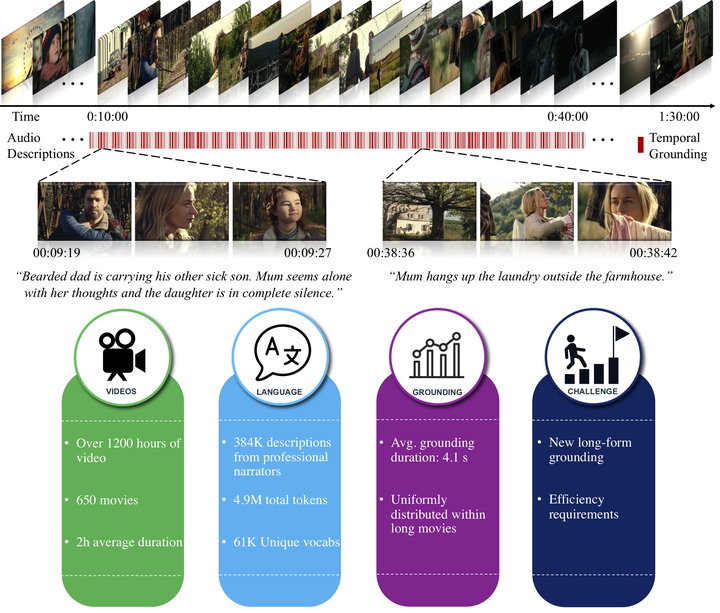

MAD: A Scalable Dataset for Language Grounding in Videos from Movie Audio Descriptions

Abstract

The recent and increasing interest in video-language research has driven the development of large-scale datasets that enable data-intensive machine learning techniques. By comparison, limited effort has been made to assess the fitness of these datasets for the video-language grounding task. Recent works have begun to discover significant limitations in these datasets, suggesting that state-of-the-art techniques commonly overfit to hidden dataset biases. In this work, we present MAD (Movie Audio Descriptions), a novel benchmark that departs from the paradigm of augmenting existing video datasets with text annotations and focuses on crawling and aligning available audio descriptions of mainstream movies. MAD contains over 384,000 natural language sentences grounded in over 1,200 hours of video and exhibits a significant reduction in the currently diagnosed biases for video-language grounding datasets. MAD’s collection strategy enables a novel and more challenging version of video-language grounding, where short temporal moments (typically seconds long) must be accurately grounded in diverse long-form videos that can last up to three hours.

BibTeX

@InProceedings{Soldan_2022_CVPR,
    author    = {Soldan, Mattia and Pardo, Alejandro and Alc\'azar, Juan Le\'on and Caba, Fabian and Zhao, Chen and Giancola, Silvio and Ghanem, Bernard},
    title     = {MAD: A Scalable Dataset for Language Grounding in Videos From Movie Audio Descriptions},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2022},
    pages     = {5026-5035}
}